FPGA accelerator for semantic segmentation

Accelerating Sparse Neural Networks for Semantic Image Segmentation on FPGA platforms

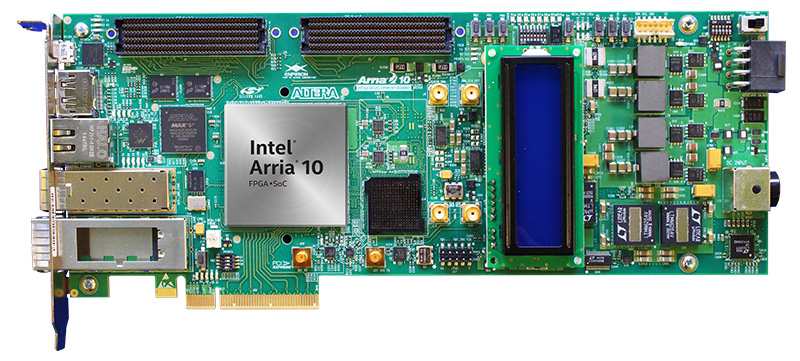

From healthcare to autonomous driving, Deep Neural Networks (DNNs) outperform traditional Computer Vision (CV) approaches in both accuracy and efficiency. The exponential growth of DNN applications demands tremendous computational power from the underlying hardware, because the superior performance of DNN models comes at the cost of a huge memory footprint and complex calculations. Although Graphics Processing Units (GPUs) are the main workhorse for training DNNs thanks to their massive computational capabilities, they are not suitable for mobile deployments. Offloading computation to a remote cloud is also unsuitable because of unreliable network latency and potential security issues. Since this research area changes rapidly, hardware that accelerates inference at the edge should also offer deployment flexibility. Field-Programmable Gate Arrays (FPGAs) provide the best trade-off between performance, power consumption and design flexibility.

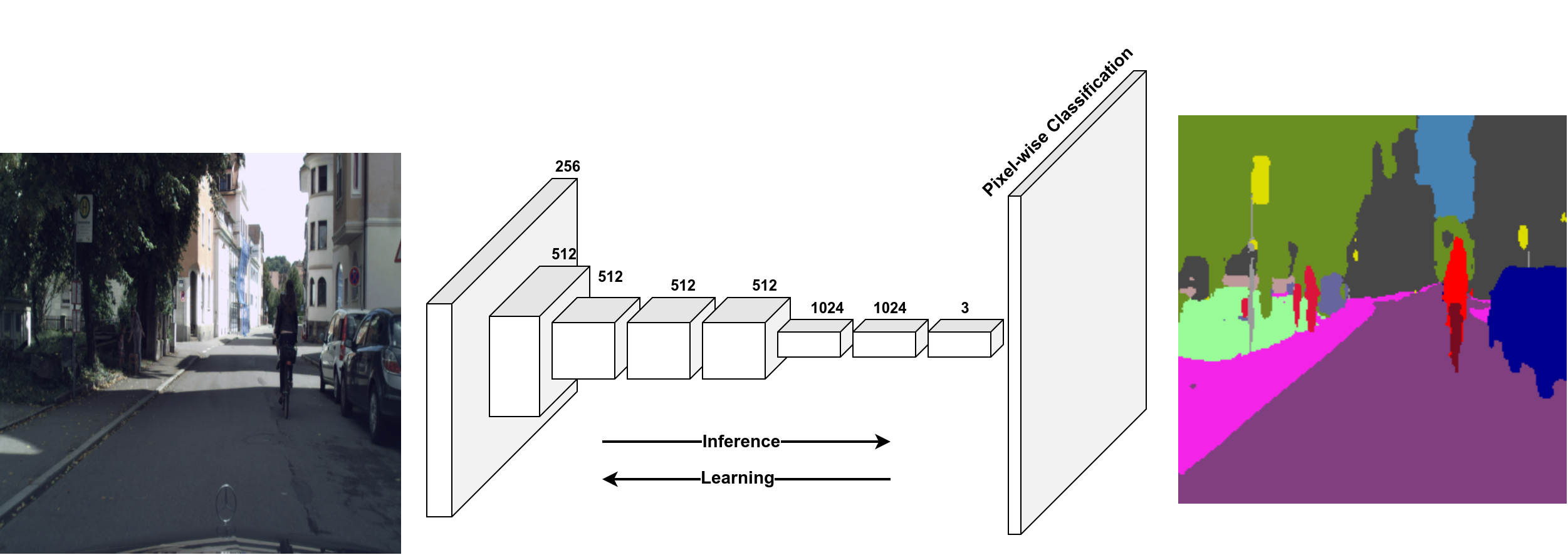

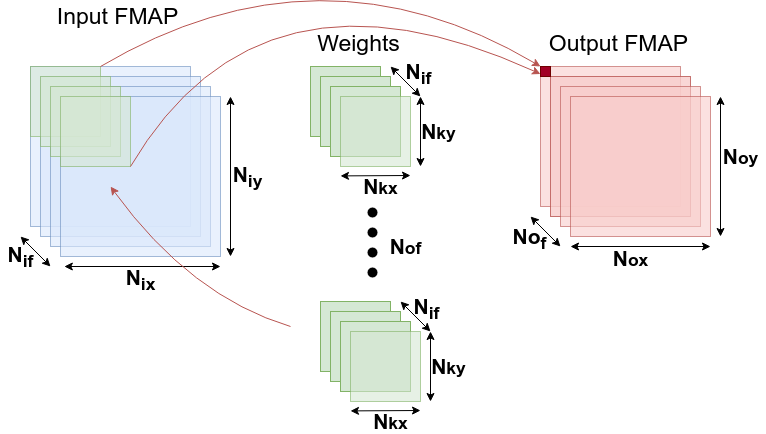

With Convolutional Neural Networks (CNNs) being the de facto framework for computer vision, semantic image segmentation is one of the most complex tasks, as it provides pixel-wise annotations for complete scene understanding. For a critical application like autonomous driving, the DeepLabV3+ model provides state-of-the-art Mean Intersection-Over-Union (mIOU) on the Cityscapes dataset. In this work, a fully pipelined hardware accelerator implementing a novel dilated convolution was introduced. Using this accelerator, an end-to-end DeepLabV3+ deployment was possible on an FPGA. The architecture exploits hardware optimizations such as 3-D loop unrolling and memory tiling to maximize the use of computational resources, and it provides a 2.34X latency improvement with respect to the baseline architecture.
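For readers unfamiliar with dilated (atrous) convolution, the operation the accelerator is built around can be sketched in a few lines of plain Python. This is the standard textbook definition, not the novel hardware formulation from the paper. The function name `dilated_conv2d` and the nested-list data layout are assumptions made only for illustration. The comments mark the loops that an accelerator would typically unroll or tile.

```python
def dilated_conv2d(image, kernel, dilation=1):
    """Valid-mode 2-D dilated convolution on nested lists.

    Illustrative only: computed as cross-correlation (no kernel flip),
    which is the usual convention in CNN frameworks.
    """
    kh, kw = len(kernel), len(kernel[0])
    ih, iw = len(image), len(image[0])
    # A dilated kernel covers a wider window without adding weights.
    eff_h = (kh - 1) * dilation + 1
    eff_w = (kw - 1) * dilation + 1
    oh, ow = ih - eff_h + 1, iw - eff_w + 1
    out = [[0] * ow for _ in range(oh)]
    # Outer loops over output pixels: candidates for tiling in hardware.
    for y in range(oh):
        for x in range(ow):
            acc = 0
            # Inner loops over kernel taps: candidates for unrolling.
            for ky in range(kh):
                for kx in range(kw):
                    acc += kernel[ky][kx] * image[y + ky * dilation][x + kx * dilation]
            out[y][x] = acc
    return out


if __name__ == "__main__":
    img = [[r * 5 + c for c in range(5)] for r in range(5)]
    ones = [[1] * 3 for _ in range(3)]
    # With dilation=2 a 3x3 kernel spans the whole 5x5 input.
    print(dilated_conv2d(img, ones, dilation=2))  # [[108]]
```

With `dilation=1` the function reduces to an ordinary 3x3 convolution. Larger dilation rates widen the receptive field at the same multiply-accumulate count, which is why DeepLab-style models rely on them.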

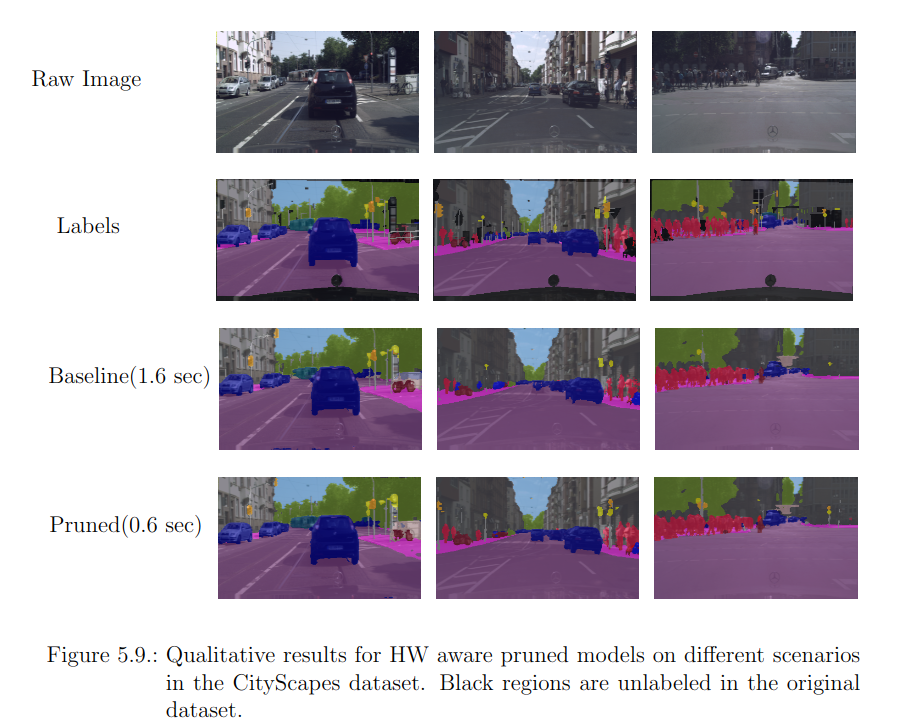

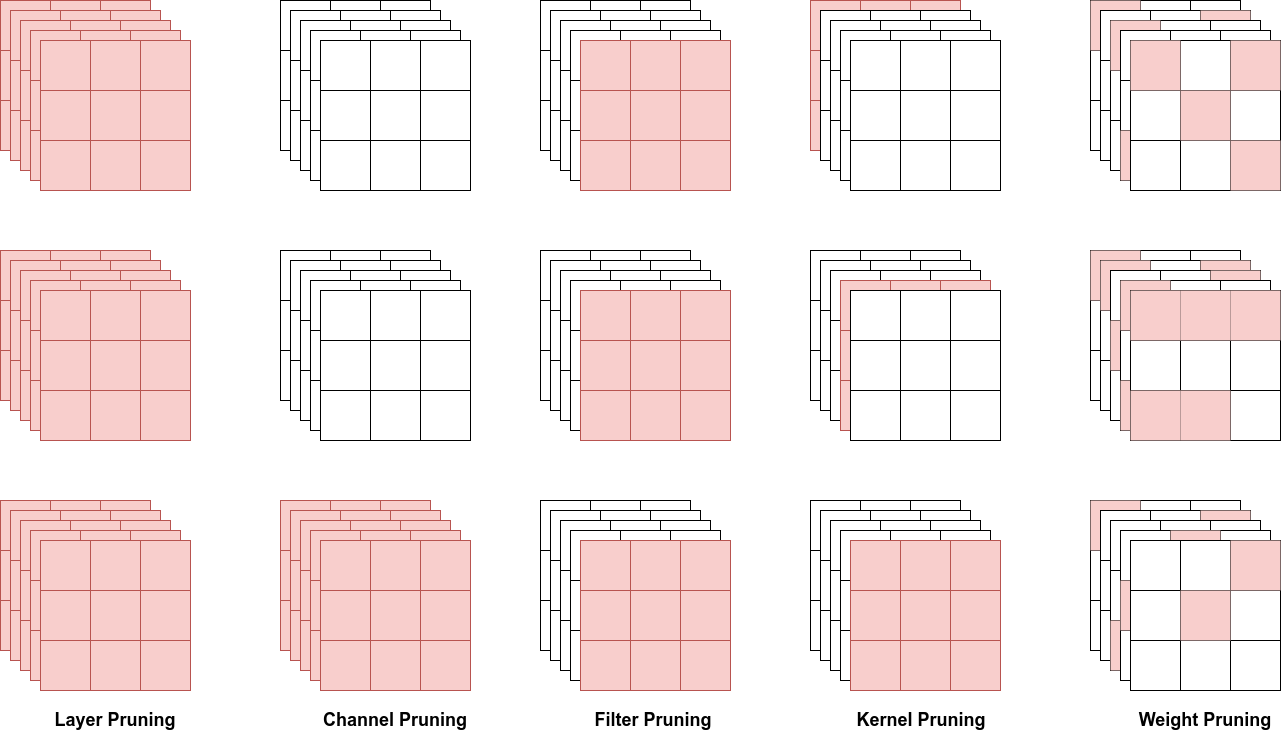

Further, a Genetic Algorithm (GA) based automated channel pruning technique was used to jointly optimize hardware usage and model accuracy. Finally, hardware awareness was incorporated into the pruning search through hardware heuristics and an accurate model of the custom accelerator. Overall, a 4X latency improvement was observed at the cost of a 4% drop in mIOU.
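The general shape of a GA-driven channel pruning search can be sketched as follows. This is a toy under stated assumptions, not the method from the paper: the per-channel `importance` and `cost` lists, the weighting `lam`, and the `fitness` function are hypothetical stand-ins for the real accuracy evaluation and the accelerator latency model. Each individual is a binary mask over channels, where 1 means keep and 0 means prune.

```python
import random


def fitness(mask, importance, cost, lam=0.5):
    """Hypothetical objective: retained importance minus a latency penalty."""
    kept_importance = sum(i for m, i in zip(mask, importance) if m)
    kept_cost = sum(c for m, c in zip(mask, cost) if m)
    return kept_importance / sum(importance) - lam * kept_cost / sum(cost)


def ga_prune(importance, cost, pop_size=20, generations=40, mut_rate=0.05, seed=0):
    """Search for a channel mask with a simple elitist genetic algorithm."""
    rng = random.Random(seed)  # seeded for reproducible runs
    n = len(importance)        # assumes at least two channels

    def score(mask):
        return fitness(mask, importance, cost)

    # Random initial population of keep/prune masks.
    pop = [[rng.randint(0, 1) for _ in range(n)] for _ in range(pop_size)]
    for _ in range(generations):
        pop.sort(key=score, reverse=True)
        parents = pop[: pop_size // 2]  # truncation selection, parents survive
        children = []
        while len(parents) + len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            cut = rng.randrange(1, n)   # single-point crossover
            child = a[:cut] + b[cut:]
            # Bit-flip mutation with probability mut_rate per channel.
            child = [bit ^ 1 if rng.random() < mut_rate else bit for bit in child]
            children.append(child)
        pop = parents + children
    return max(pop, key=score)


if __name__ == "__main__":
    importance = [0.9, 0.1, 0.8, 0.05, 0.7, 0.02, 0.6, 0.01]  # made-up values
    cost = [1.0] * 8                                           # made-up values
    best = ga_prune(importance, cost)
    print(best, round(fitness(best, importance, cost), 3))
```

In a hardware-aware setting, the latency term would come from a model of the target accelerator rather than a sum of per-channel costs, and the accuracy term would come from evaluating the pruned network on validation data.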

The findings and development details of the accelerator have been compiled here, and the paper based on this work was recently published at DAC 2022.